Detector Control System (DCS) for ALICE Front-end electronics

Purpose

This page acts as a knowledge base for parts of the ALICE Detector Control System (DCS), namely the control of the Front-end electronics of several sub-detectors of ALICE. Since we are mainly involved in the TPC Front-end Electronics, this site may be a bit biased. But it may evolve to a more comprehensive data base for other detectors as well.

Overview

The Detector Control System (DCS) is one of the major modules of the ALICE detector. In general, it covers the tasks of controlling the cooling system, the ventilation system, the magnetic fields and other supports as well as the configuration and monitoring of the Front-end electronics. This page will only cover topics related to the latter. More information on the DCS in general can be found at the DCS website.

So far, the following sub-detectors use a number of common components which have been developed in a joint effort:

- Time Projection Chamber (TPC)

- Transition Radiation Detector (TRD)

- Photon Spectrometer (PHOS)

- Forward Multiplicity Detector (FMD)

- EMCAL (new)

They all use the DCS board as controlling node on the hardware level. Thus, the communication software can be (almost) identically. The DCS board is a custom-made board with an Altera FPGA that includes an ARM core, which provides the opportunity to run a light-weight Linux system on the card. The board interfaces to the front-end electronics via a dedicated hardware interface and connects to the higher DCS-layers via the DIM communication framework over Ethernet.

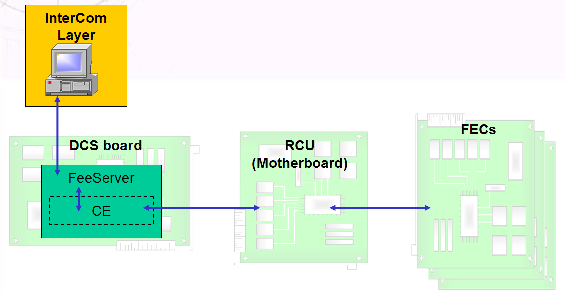

Furthermore, all detectors but the TRD use a similar hardware setup in the front-end electronics: the Front-End Cards are based on the ALTRO chip and served by Readout Control Units (RCUs). Below is a figure exemplarily for the TPC.

Software Architecture

The DCS consist of three main functional layers. From top to bottom this is:

- The Supervisory Layer. The Supervisory Layer provides a user interfaces to the operator. It also communicates with external systems for the LHC

- The Control Layer. The Supervisory Layer communicates with the Control Layer mainly through a LAN network. This layer consists of PCs, PLCs (Programmable Logic Cells) and PLC like devices. The Control Layer collects and processes information from the Field Level, as well as sending commands and information from the Supervisory Layer to the Field Layer. It also connects to the Configuration Database.

- The Field Layer. The Field Layer consists of all field-devices, sensors, actuators and so on. The DCS board and the readout electronics is located in this

layer.

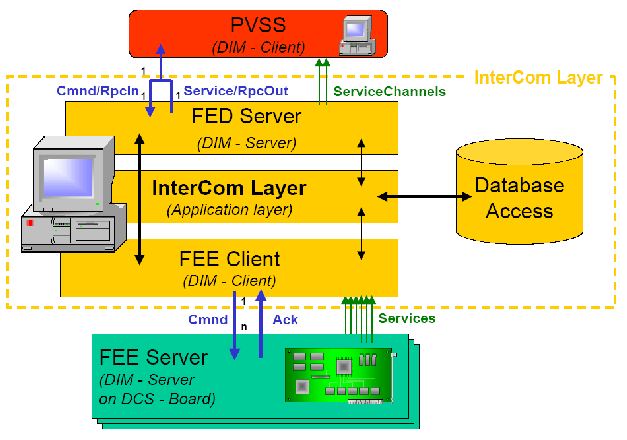

For the ALICE experiment, PVSS II has been chosen as SCADA (Supervisory Control And Data Acquisition) system (i.e. the Supervisory Layer). The PVSS connects to the InterComLayer, a specific communication software acting as the Control Layer and connecting the hardware devices in the Field Layer to the controlling system in the Supervisory Layer. The system uses the communication framework DIM (Distributed Information Management).

To interfaces are defined for the DCS which abstract the underlaying hardware:

- PVSS and InterComLayer communicate through a specific interface, the Front-End-Device (FED), which is common among different sub-detectors within the ALICE experiment. The InterComLayer implements a server which the PVSS can subscribe to as a client.

- Each hardware device implements a Front-End-Electronics-Server (FeeServer), which the InterComLayer subscribes to as a client.

The InterComLayer connects to several FeeServers and pools data before distributing it to the SCADA system. Vice versa the InterComLayer distributes configuration data to the FeeServers. In addition it implements an interface to the Configuration Database containing all specific configuration data for the hardware devices.

Hardware Components

- DCS board

- Readout-Control-Unit

- Frontend Cards

- TRD Readout board

The communication software

Communication Protocol

Communication between all layers is based on the DIM protocol. DIM is an open source communication framework developed at CERN. It provides a network-transparent inter-process communication for distributed and heterogeneous environments. TCP/IP over Ethernet is used as transport layer. A common library for many different operating systems is provided by the framework. DIM implements a client-server relation with two major functionalities.

- Services: The DIM server publishes so called services and provides data through a service. Any DIM client can subscribe to services and monitor their data. The DIM clients get notified about current values via a callback from the DIM server.

- Commands: A DIM server can accept commands from DIM clients. Server and client have to agree on the format of the command. Here applies the same for the possible data types like for the services.

A dedicated DIM name-server takes control over all the running clients, servers and their services available in the system. Each server registers at startup all its services and command channels. For a client the location of a server is transparent. It asks the DIM name-server which server provides a specific service and retrieves the access information. The process then connects directly to the corresponding server. The DIM name-server concept eases a recovery process of the system after update and restart of servers or clients at any time. It enables also fast migration from one machine to another and distributed tasks. Whenever one of the processes (a server or even the name server) in the system crashes or dies all processes connected to it will be notified and will reconnect as soon as it comes back to life.

PVSS

InterComLayer

The InterComLayer takes the task of the Control Layer. It runs independently from the other system layers on a separate machine outside of the radiation area. It provides three interfaces:

- Front-End-Electronics Client (DIM client) to connect to the Field Layer

- Front-End-Device Server (DIM server) to connect to the Supervisory Layer

- Interface to the Configuration Database, using a database client

The FEE interface consists of a command channel, a corresponding acknowledge channel, a message channel and several service channels.

These services are referred to be values of interest like temperature, voltage, currents, etc. of the Front-end electronics. In order to reduce network traffic the FeeServer can apply thresholds for data value changes, so called dead-bands.

Changes are only published if a certain dead-band is exceeded, the dead-band can be defined for each service independently in conformity with the different nature of the data.

The InterComLayer connects to FeeServers according to the configuration.

After the connection is established, the InterComLayer subscribes to the services of the FeeServers and controls their message channels. Filtering of messages according to the log-level is performed on each layer to reduce network traffic.

The service channels of the FeeServers are pooled together and re-published to the supervisory level. By this means the source of the services is transparent to the SCADA system on top.

The InterComLayer is an abstraction layer, which disconnects Supervisory and Field Layer.

In order to transport configuration data to the Front-end electronics, the InterComLayer has an interface to the configuration database. Neither database nor InterComLayer know about the format of the data. The data will be handled as BLOBs (Binary Large OBjects). The database contains entries of configuration packages. For each specific configuration of the Front-end electronics it creates a descriptor which refers to the required configuration packages. The Supervisory Layer sends a request to load a certain configuration to the InterComLayer, which then fetches the corresponding BLOB from the configuration database and transports it through the command channel to the FeeServers.

In addition, the InterComLayer provides functionality for maintenance and control of the FeeServers. Servers can be updated, restarted and their controlling properties can be adjusted to any requirements.

For further details go to the InterCom Layer page.

Compiliation and Installation

It exists a dedicated description for compiling and installing the InterComLayer installation.

The Front-End-Electronics-Server

The DCS for the Front-end elctronics is based on so called Front-End-Electronics-Servers (FeeServers), which are running on the DCS board close to the hardware. A FeeServer abstracts the underlying Front-end electronics to a certain degree and covers the following tasks:

- Interfacing hardware data sources and publishing data

- Receiving of commands and configuration data for controlling the Front-end electronics

- Self-tests and Watchdogs (consistency check and setting of parameters)

In order to keep development simple and the software re-usable for different hardware setups the FeeServer has been splitted into a device independent core and an interface to the hardware dependent functionality.

It exists a dedicated description for compiling and installing the FeeServer, as well as some hints and guidelines for developing an own ControlEngine (CE) for the FeeServer.

The FeeServer core

The core of the FeeServer is device-independent. It provides general communication functionality, remote control and update of the whole FeeServer application. Some features are related to the configuration of the data publishing. In order to reduce network traffic, variable deadbands have been introduced. Data is only updated if the variation exceeds the deadband. The core can be used for different devices, i.e. different detectors of the ALICE experiment. The device-dependent actions are adapted for each specific device and are executed in separated threads. This makes a controlled execution possible. If execution is stuck, the server core is still running independently. It can kill and clean up the stuck thread and notify the upper layers.

The ControlEngine (CE)

The ControlEngine implements the device dependent functionality of the FeeServer. The CE provides data access in order to monitor data points and executes received commands specific for the underlying hardware. An abstract interface between FeeServer and CE is defined, the ControlEngine implements interface methods for initializing, cleaning up and command execution. It has contact to the specific bus systems of the hardware devices. The access is ancapsulated in Linux device drivers. This makes the functionality of the CE independent from the hardware/firmware version.

One example is the CE for the TPC detector and the RCU. Please check the dedicated site RCU ControlEngine.

DCS board tools

This sections covers the DCS board software for the TPC-like detectors (so far TPC, PHOS, most likely FMD and EMCAL). Apart from the mentioned FeeServer there are some low level tools and interfaces. In fact, the FeeServer uses them. ... read more.

Specifications

Front-End-Device interface

The FED API is common among all detectors in ALICE. It defines the interface between the FedServer(s) and the PVSS system of one detector. A more detailed description you will find in the document itself.

This is the version currently (03.02.2006) also available at the DCS pages: FED API v2.0 (draft)

Front-End-Electronics interface

Control Engine interface

Format of TPC configuration data

Links

- The ALICE TPC Front End Electronics

- ALICE Detector Control System group

- The DCS board pages

- ARM linux on the DCS board

- FeeCom Software

- Distributed Information Management System (DIM)